When you connect your VM to NordVPN a new Network device is created (tun). Now, in GCP (normally) all VM have only 1 vNIC and is through that vNIC that all traffic is being routed. In you can find step by step how to access your VM using the serial console through your Google Cloud Platform panel. You best chance to know what’s going on inside your VM once the network access is not available, as you described, is to interact with the Serial Console. It’s seems that the VPN Client is receiving network routes from your VPN Provider so the VM is routing all traffic through the VPN so all inbound connections are being dropped. No doubt I believe this situation is possible but I cannot understand that further settings I have to enter with respect to the local VM. My situation is instead that the VM is the client and the VPN server is external. I only find material online to create a VPN server within GCP but it is not my case.

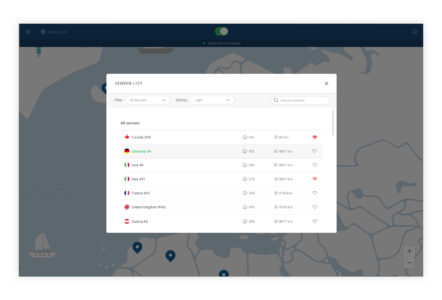

The test was done both with the IP Forwarding enabled and disabled at the time the VM was created. Premise that I am not a networking master. I can no longer ping it from both the internal address and the external address. This problem does not occur if I use a local VM instead. I installed the client (the test was done both with the custom client of the vendor, and with openVPN with the list of the vendor's servers) but when I go to connect between the VM and the VPN I lose control of the machine, the terminal hangs. Now I find myself for a project to use it on different VM Debian 9 machines in Google Cloud. I have an active subscription with NordVPN and I have always used this VPN without problems, both from Windows, from Mobile, and from Linux on-premises virtual machines. Lose control of the VM Instance Debian 9 in Google Compute Engine when I try to connect to a VPN Service Provider (NordVPN).
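To see why connecting the VPN drops inbound connections, it helps to model how Linux picks an outgoing interface. The kernel uses the most specific (longest-prefix) matching route. The sketch below is a simplified model that ignores metrics and policy-routing rules. It assumes the VPN client installs OpenVPN-style `redirect-gateway def1` routes: two /1 routes via `tun0`, which are more specific than the default route, plus a host route to the VPN server via the original gateway. The interface names (`eth0`, `tun0`) and all addresses are hypothetical placeholders, not values taken from the question. Under those assumptions, a reply to an SSH client at a public IP leaves through `tun0` instead of the vNIC, so the session hangs.

```python
import ipaddress
from typing import List, Optional, Tuple, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]
Route = Tuple[Network, str]


def parse_routes(text: str) -> List[Route]:
    """Parse `ip route`-style lines into (destination network, device) pairs."""
    routes: List[Route] = []
    for line in text.strip().splitlines():
        tokens = line.split()
        if "dev" not in tokens:
            continue  # skip blank lines and routes without an output device
        dest = tokens[0]
        # "default" is the catch-all route; a bare host address becomes a /32.
        net = ipaddress.ip_network("0.0.0.0/0" if dest == "default" else dest, strict=False)
        dev = tokens[tokens.index("dev") + 1]
        routes.append((net, dev))
    return routes


def egress_device(routes: List[Route], dst: str) -> Optional[str]:
    """Return the device of the longest-prefix route covering dst (simplified lookup)."""
    addr = ipaddress.ip_address(dst)
    best: Optional[Route] = None
    for net, dev in routes:
        if addr.version == net.version and addr in net:
            if best is None or net.prefixlen > best[0].prefixlen:
                best = (net, dev)
    return best[1] if best else None


# Hypothetical routing table of the VM before the VPN connects.
ROUTES_BEFORE = """
default via 10.128.0.1 dev eth0
10.128.0.1 dev eth0 scope link
"""

# Hypothetical routes added by an OpenVPN-style client with redirect-gateway def1.
ROUTES_AFTER = ROUTES_BEFORE + """
0.0.0.0/1 via 10.8.0.1 dev tun0
128.0.0.0/1 via 10.8.0.1 dev tun0
10.8.0.0/24 dev tun0 proto kernel scope link src 10.8.0.2
203.0.113.10 via 10.128.0.1 dev eth0
"""

if __name__ == "__main__":
    ssh_client = "198.51.100.7"  # hypothetical public IP of your workstation
    for label, table in (("before", ROUTES_BEFORE), ("after", ROUTES_AFTER)):
        dev = egress_device(parse_routes(table), ssh_client)
        print(f"{label} VPN: reply to {ssh_client} leaves via {dev}")
```

Under these assumptions, the replies to your workstation use `eth0` before the VPN connects and `tun0` afterwards. Only the host route keeps traffic to the VPN server itself on the vNIC.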